To attain superior performance in AI/ML workloads, harnessing the power of cloud GPUs is now a must-have strategy. Many AI workloads, especially those built on complex neural networks, demand enormous computational power. This is where cloud GPUs play a crucial role: they let machine learning workloads process enormous datasets and shorten model training times.

With cloud GPU technology, AI/ML workloads can be scaled up or down easily, at lower cost and with better efficiency. Cloud GPU services will also become increasingly important for serving AI inference workloads, where real-time AI and ML applications need fast and accurate predictions to support quick decision-making.

In this blog, we’re going to talk about something arguably more crucial than AI itself: the GPUs that make LLMs possible, and how to make the best use of their computing power. Here’s everything you need to know about GPUs and cloud GPUs in detail.


Impact of LLMs on Workloads

To work efficiently, Large Language Models (LLMs) are fed massive training datasets. This activity is commonly referred to as “model training.” LLMs and foundation models (FMs) acquire their “reasoning” through model training and, most of the time, their training data comes from public datasets like Common Crawl. That doesn’t mean an application cannot train on private datasets. For LLMs trained on private datasets, the information has to be “parameterized,” which ensures it is in a format the model can use. Each of these parameters is then given a weight that captures how much it should influence the results from the model.

Because this process is so computationally demanding, GPUs are essential.

Why Leverage Cloud GPUs (Graphics Processing Units)?

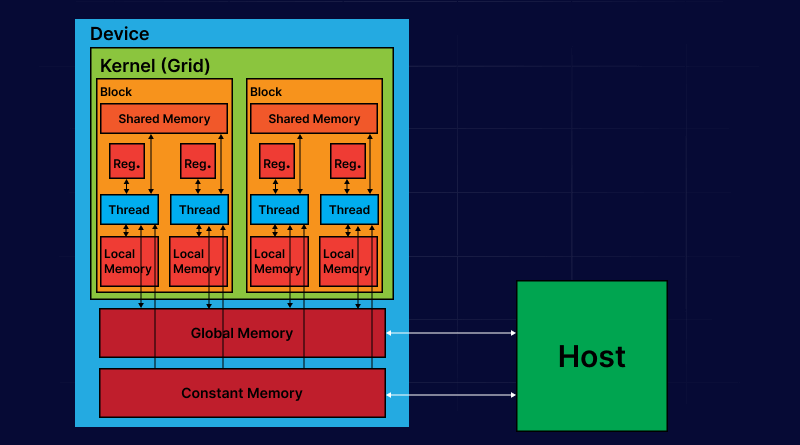

Originally designed to render graphics on a computer, GPUs execute many operations in parallel, and that is why they’ve found a place in AI development.

To put this into perspective just a little bit, here are a few numbers:

- OpenAI is believed to have used 25,000 NVIDIA A100 GPUs to train their 1.76T parameter GPT-4 model for over 100 days straight.

- Meta reportedly took 1.7 million GPU hours to train its 70B parameter Llama 2 model; that’s roughly the equivalent of 10,000 GPUs running for about seven days.

- Meta also announced that its compute infrastructure would reach the equivalent of nearly 600,000 NVIDIA H100s by the end of 2024, as it trained its then-upcoming Llama 3 model.
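To make numbers like these easier to compare, here is a minimal sketch that converts between GPU counts, days, and GPU-hours. It assumes perfect linear scaling across GPUs, which real clusters do not achieve, and it simply reuses the reported (unverified) figures from the list above. The function names are illustrative only.

```python
HOURS_PER_DAY = 24


def gpu_hours(num_gpus: int, days: float) -> float:
    """Total GPU-hours used by num_gpus running continuously for the given days."""
    return num_gpus * days * HOURS_PER_DAY


def wall_clock_days(total_gpu_hours: float, num_gpus: int) -> float:
    """Days needed to spend total_gpu_hours across num_gpus running in parallel."""
    return total_gpu_hours / num_gpus / HOURS_PER_DAY


# Reported figures quoted in the list above; treat them as estimates.
gpt4_gpu_hours = gpu_hours(num_gpus=25_000, days=100)
llama2_days = wall_clock_days(total_gpu_hours=1_700_000, num_gpus=10_000)

print(f"GPT-4 estimate: {gpt4_gpu_hours:,.0f} GPU-hours")
print(f"Llama 2 70B on 10,000 GPUs: {llama2_days:.1f} days")
```

Under these assumptions, 1.7 million GPU-hours spread across 10,000 GPUs is 170 hours per GPU, or about seven days of wall-clock time.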

It would seem that the Big 4 and all the other tech giants have pushed AI models to a whole new level of innovation. Give it a few years, and the day when just about anyone can train a model may not be far off. Many models are already open source, so now let’s talk about GPUs and the impact of the GPU cloud on modern workloads. Experts believe that cloud GPUs are going to be the key to the commoditization of AI. Imagine an era where kids spin up AI models for their toys!

There is much more to how Cloud GPUs play a pivotal role in the entire development.

Why Do AI/ML Workloads Need High Performance?

Because of their intricate nature and complex datasets, AI and ML workloads depend on powerful computational capabilities. AI workloads built on deep learning (DL) models must process enormous datasets in order to produce models that deliver highly accurate results.

Likewise, machine learning workloads require huge amounts of computation at scale to develop better algorithms and improve model accuracy. Because AI/ML workloads are so data-intensive, that processing needs to happen at high speed. Harnessing cloud GPUs is one of the most effective ways to get the computing capability needed to speed up both model training and inference tasks. Through cloud computing, cloud GPUs make real-time AI inference workloads practical, so that AI and ML applications can make quick and accurate decisions.

What are GPUs and GPU Performance?

GPUs, i.e., Graphics Processing Units, are high-performance processors that speed up the handling of huge datasets and complicated algorithmic tasks. As a result of these capabilities, GPUs are crucial elements in revolutionizing AI and ML technologies.

GPU performance, in turn, measures how quickly and efficiently a GPU can handle a complex computing workload. Tailored optimization techniques can increase GPU performance, which results in accelerated model training, real-time inference, and better handling of parallel computation tasks.
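One common way to put a number on "performance" is sustained throughput: how many floating-point operations per second a device completes on a known workload. The sketch below illustrates the idea with a tiny matrix multiplication timed in pure Python on the CPU. It is only meant to show the arithmetic (operations divided by elapsed time); benchmarking a real GPU would use a GPU framework and the vendor's profiling tools, and the function names here are illustrative.

```python
import time


def matmul_flops(n: int) -> int:
    """Approximate floating-point operations in an n x n by n x n matrix multiply."""
    return 2 * n ** 3  # one multiply and one add per inner-loop step


def achieved_gflops(flops: float, seconds: float) -> float:
    """Sustained throughput in GFLOP/s for a workload of the given size."""
    return flops / seconds / 1e9


def naive_matmul(a, b):
    """Plain triple-loop matrix multiply on lists of lists."""
    inner, cols = len(b), len(b[0])
    return [
        [sum(row[k] * b[k][j] for k in range(inner)) for j in range(cols)]
        for row in a
    ]


n = 64
a = [[1.0] * n for _ in range(n)]
b = [[2.0] * n for _ in range(n)]

start = time.perf_counter()
c = naive_matmul(a, b)
elapsed = time.perf_counter() - start

print(f"{matmul_flops(n):,} FLOPs in {elapsed:.4f} s "
      f"= {achieved_gflops(matmul_flops(n), elapsed):.3f} GFLOP/s")
```

The same ratio, measured on the workload you actually care about, is usually more informative than a spec-sheet peak figure.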

Cloud GPUs for Improved AI/ML Workload Performance

Cloud GPUs are purpose-built compute instances running in cloud infrastructure. They use a hardware layout designed to take on intense computing workloads. This configuration is very different from a typical CPU-based system because GPUs are highly optimized for parallel processing.

That also makes them exceptionally well suited to workloads that involve heavy data crunching and intensive mathematical calculations. This inherent parallelism makes GPUs a good fit for analytics, deep learning, computer-aided design, gaming, and image recognition.

Apart from offering much more computational power than central processing units (CPUs) for these workloads, cloud GPUs spare you from installing physical hardware on local systems. Instead, you tap into advanced computing resources hosted remotely by a cloud provider.

This simplifies infrastructure management and makes scaling easy, so businesses can adjust their computing resources as requirements change.

GPU Role in Model Inference

Once trained, large foundation models still need ongoing compute in order to serve requests. This stage is referred to as “inference.” Training LLMs, i.e., Large Language Models, often requires very large clusters of interconnected GPUs operating for an extended period of time. Model inference, by contrast, requires considerably less compute per request, but because it runs for as long as the model is in use, it remains a crucial factor to consider.
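To get a feel for the gap between training and inference, here is a rough back-of-the-envelope sketch. It relies on a widely used rule of thumb for dense transformer models, not an exact formula: training costs about 6 FLOPs per parameter per training token, and generating one token at inference costs about 2 FLOPs per parameter. The 2-trillion-token training set is an assumption made for illustration, and the function names are hypothetical.

```python
def training_flops(params: float, tokens: float) -> float:
    """Rule-of-thumb training cost: ~6 FLOPs per parameter per training token."""
    return 6 * params * tokens


def inference_flops_per_token(params: float) -> float:
    """Rule-of-thumb inference cost: ~2 FLOPs per parameter per generated token."""
    return 2 * params


PARAMS = 70e9          # a 70B-parameter model, like the one mentioned earlier
TRAIN_TOKENS = 2e12    # assumed training set size (illustrative)

train_total = training_flops(PARAMS, TRAIN_TOKENS)
per_token = inference_flops_per_token(PARAMS)
tokens_to_match_training = train_total / per_token

print(f"Training:  ~{train_total:.2e} FLOPs in total")
print(f"Inference: ~{per_token:.2e} FLOPs per generated token")
print(f"Tokens served before inference compute equals training: "
      f"~{tokens_to_match_training:.2e}")
```

The takeaway is that a single inference request is tiny next to a training run, but a popular model serving requests around the clock can eventually add up to a comparable amount of compute.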

Cloud GPUs vs Traditional GPUs

What distinguishes cloud GPUs, most notably those built on NVIDIA hardware, from conventional on-premises GPUs is ease of use and pricing, among other things. Here are the main aspects that set one apart from the other.

| Aspect | Cloud GPUs | Traditional GPUs |
| --- | --- | --- |
| Cost Efficiency | Pay-as-you-go model, reducing initial investment costs | High upfront costs for hardware and ongoing maintenance |
| Scalability | Easily scalable, i.e., scale up or down on demand | Limited scalability, constrained by fixed hardware capacity |
| Flexibility | Access to varied configurations and software updates | Requires static setups and hardware adjustments |
| Maintenance | Managed by the provider, reducing in-house maintenance needs | Requires dedicated in-house management |
| Access | Accessible remotely, supporting distributed teams | Location-bound, limiting remote access |
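The cost-efficiency row is easier to reason about with numbers. The sketch below compares pay-as-you-go rental against buying hardware and estimates the monthly usage at which owning becomes cheaper. Every price in it is a made-up placeholder, not a quote from any provider, so swap in your own figures.

```python
def cloud_cost(hours: float, hourly_rate: float) -> float:
    """Pay-as-you-go cost: you pay only for the hours you use."""
    return hours * hourly_rate


def on_prem_cost(months: float, purchase_price: float, monthly_upkeep: float) -> float:
    """Ownership cost: upfront purchase plus recurring power, hosting, and maintenance."""
    return purchase_price + months * monthly_upkeep


def break_even_hours_per_month(months: float, purchase_price: float,
                               monthly_upkeep: float, hourly_rate: float) -> float:
    """Monthly usage above which owning is cheaper than renting over the period."""
    return on_prem_cost(months, purchase_price, monthly_upkeep) / (months * hourly_rate)


# Hypothetical figures for illustration only.
HOURLY_RATE = 2.50        # cloud price per GPU-hour
PURCHASE_PRICE = 30_000   # upfront price of one GPU server
MONTHLY_UPKEEP = 500      # power, hosting, and maintenance per month
MONTHS = 36               # planning horizon

threshold = break_even_hours_per_month(MONTHS, PURCHASE_PRICE, MONTHLY_UPKEEP, HOURLY_RATE)
print(f"Owning pays off above ~{threshold:.0f} GPU-hours per month")
```

With these placeholder numbers the threshold lands at roughly 533 hours a month, which is most of the roughly 730 hours in a month. In other words, renting tends to win for bursty or occasional workloads, while owning only pays off when the hardware stays busy almost all the time.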


Maximize Computing Potential with MilesWeb’s Next-Level Cloud GPU

Unlock the full potential of your AI/ML workloads with Cloud GPU hosting from MilesWeb. Dedicated GPU resources, built for high-performance computing and offered on a managed basis, deliver outstanding performance at an affordable price.

With dedicated GPU resources available to you, speed up complex computations, optimize models, and receive the results in the shortest time at your fingertips. Whether it is an AI model, high-end rendering, or a bulk data processing task, MilesWeb Cloud GPU hosting guarantees you unparalleled reliability and speed. Get ahead with high-quality, cost-effective cloud solutions to take your projects to a new level.

MilesWeb offers cutting-edge GPU cloud hosting with high-performance NVIDIA GPUs. These GPUs are well suited to running AI/ML workloads, rendering, and other resource-intensive applications. With scalable resources, 24/7 support, and flexible pricing, businesses at both the startup and enterprise level get ready access to strong GPU infrastructure without the cost burden of traditional servers.

Whether your business is a startup or an enterprise, MilesWeb’s easy-to-manage Cloud GPU services give you the flexibility and reliability needed for smooth, uninterrupted performance, with high-end GPU servers matched to your needs.

FAQs

Distinguish between Cloud GPUs and traditional GPUs.

Traditionally, GPUs have been installed on local hardware, which requires significant upfront investment and ongoing maintenance. In return, they provide dedicated and predictable performance for resource-intensive applications, which makes them best for stable, ongoing operations. Cloud GPUs, on the other hand, are remotely hosted, scalable, and follow a pay-as-you-go model. They offer flexible, high-performance computing and are ideal for dynamic workloads.

Explain the benefits of utilizing Cloud GPUs for AI/ML workloads.

Whether it is AI, machine learning, or big data analytics, all of these workloads are accelerated by GPUs and deliver results more rapidly. For an enterprise, the benefits of using GPUs in the cloud translate into quicker decision-making than competitors can manage, and into the ability to take on more extensive projects that would otherwise have run into processing limitations.

What are the important factors to consider when selecting the right Cloud GPU for my AI/ML workloads?

When selecting the right Cloud GPU for your AI/ML workload, it is important to consider several key factors, such as total core count, total memory, memory clock speed, GPU clock speed, and AI-specific hardware optimizations.
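Of these factors, total memory is often the first hard limit you hit, because a model's weights have to fit in GPU memory. Here is a minimal sketch for estimating how much memory the weights alone need and how many GPUs that implies. The 80 GB card size is just an example, and the 1.2x overhead factor is an assumed placeholder for activations and runtime buffers; real requirements vary by framework and workload.

```python
import math

# Bytes needed to store one parameter at common numeric precisions.
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "int8": 1}


def weights_memory_gb(num_params: float, dtype: str = "fp16") -> float:
    """Memory needed just to hold the model weights, in GB (1 GB = 1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


def min_gpus_for_weights(num_params: float, gpu_memory_gb: float,
                         dtype: str = "fp16", overhead: float = 1.2) -> int:
    """Smallest number of GPUs whose combined memory fits the weights plus overhead.

    The overhead factor is an assumed placeholder, not a measured value.
    """
    needed_gb = weights_memory_gb(num_params, dtype) * overhead
    return math.ceil(needed_gb / gpu_memory_gb)


for params in (7e9, 70e9):
    print(f"{params / 1e9:.0f}B params: "
          f"{weights_memory_gb(params):.0f} GB in fp16, "
          f"at least {min_gpus_for_weights(params, gpu_memory_gb=80)} x 80 GB GPU(s)")
```

A quick estimate like this helps narrow down which instance sizes are worth benchmarking before you compare clock speeds and core counts.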

Explain the different performance benchmarks for each type of Cloud GPU.

Performance benchmarks for cloud GPUs depend on the GPU model and the actual use case. For example, NVIDIA GPUs are arguably among the best options because they offer high performance for both AI and ML workloads.

What are the key data privacy concerns associated with Cloud GPUs?

Because data is stored and processed outside your own servers, sensitive information can be indirectly exposed and becomes more susceptible to unauthorized access. That is the main data privacy concern with Cloud GPUs. Shared environments also carry risks if the isolation and security measures in place are inadequate. Hence, cloud providers must follow data privacy regulations and ensure strong encryption, access controls, and regular audits.