A one-second delay in page load time results in 11% fewer page views, a 16% drop in customer satisfaction and a 7% loss in conversions. A delay of more than one second can therefore seriously hurt your ability to engage visitors and make sales. These figures show that a fast website is essential both for ranking well with Google and for increasing your bottom-line profits.

In 2006, Amazon reported that every 100 milliseconds shaved off its page load time increased revenue by 1%. Google later announced that page speed would be a factor in how it ranks websites. Since then, several case studies have shown the benefits of a fast website. This guide focuses on some important techniques that you or your developer can readily apply to improve your website’s performance and speed.

Let’s look at the factors that influence your website’s performance and how to use them to improve speed and user experience:

Combine images using CSS sprites

Combining images into as few files as possible with CSS sprites reduces the number of round trips and the delay in downloading other resources. It also cuts request overhead and can reduce the total number of bytes a web page downloads.

Google’s explanation in detail

Like JavaScript and CSS files, each image download incurs an additional round trip. A site with many images can combine them into fewer output files to reduce latency.

PageSpeed recommendations:

Sprite images that are loaded together

Combine images that load on the same page and always load together. For example, a set of icons that loads on every page should be sprited. Dynamic images that change with each pageview, such as profile pictures, or other images that change frequently are usually poor candidates for spriting.

Sprite GIF and PNG images first

GIF and PNG images use lossless compression, so they can be sprited without degrading the quality of the resulting sprite.

Sprite small images first

Each request incurs a fixed amount of overhead. For small images, this overhead can dominate the time the browser needs to download them. Combining small images reduces the overhead from one request per image to one request for the whole sprite.

Sprite cacheable images

Spriting images with long caching lifetimes means the sprite does not have to be re-fetched once the browser has cached it.

Minimize the amount of “empty space” in the sprited image

The browser must decompress and decode an image before it can display it. The size of the decoded representation is proportional to the number of pixels in the image. Empty space in a sprite may therefore have little effect on the file size, but the undisplayed pixels increase your page’s memory usage, which can make the browser less responsive.

Sprite images with similar color palettes

Spriting images with more than 256 colors can force the resulting sprite to use the PNG truecolor type rather than the palette type, which increases its size. Combining images that share the same 256-color palette produces optimal sprites. If the colors in your images allow some flexibility, consider reducing the palette of the resulting sprite to 256 colors.
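To make the spriting advice concrete, here is a minimal Python sketch that lays icons out side by side, generates the CSS that shows each icon through a negative background-position, and counts the empty pixels in the sheet. The `Icon` class, the `.icon-` class prefix and the `sprite.png` file name are assumptions made for illustration; real sprite generators use smarter packing.

```python
from dataclasses import dataclass


@dataclass
class Icon:
    name: str    # hypothetical CSS class suffix
    width: int   # pixels
    height: int  # pixels


def layout_sprite(icons):
    """Place icons in one row; return (sheet_width, sheet_height, x_offsets)."""
    offsets = {}
    x = 0
    for icon in icons:
        offsets[icon.name] = x
        x += icon.width
    sheet_height = max((icon.height for icon in icons), default=0)
    return x, sheet_height, offsets


def sprite_css(icons, sheet_url="sprite.png"):
    """Emit one CSS rule per icon, shifting the sheet left by the icon's offset."""
    _, _, offsets = layout_sprite(icons)
    rules = []
    for icon in icons:
        rules.append(
            f".icon-{icon.name} {{ background: url({sheet_url}) "
            f"{-offsets[icon.name]}px 0 no-repeat; "
            f"width: {icon.width}px; height: {icon.height}px; }}"
        )
    return "\n".join(rules)


def empty_pixels(icons):
    """Pixels in the sheet that belong to no icon (decoded but never shown)."""
    width, height, _ = layout_sprite(icons)
    used = sum(icon.width * icon.height for icon in icons)
    return width * height - used
```

Grouping icons of similar height keeps `empty_pixels` low, which is the point of the “empty space” recommendation.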

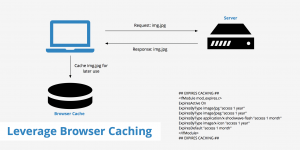

Leverage browser caching

Overview

You can improve page load times by asking visitors’ browsers to save and reuse the files that make up your website. In short:

- Reduces page load times for repeat visitors.

- Particularly effective on websites where users regularly revisit the same areas.

- Benefit-cost ratio: high.

- Access needed.

Tips to set expiries:

Access plus 1 year – Truly static content (global CSS styles, logos, etc.)

Access plus 1 week – Everything else

What is browser caching?

Whenever a browser loads a web page, it has to download all the files needed to display the page properly, including the HTML, CSS, JavaScript and images.

This is no problem for small pages of a couple of kilobytes. Other pages, however, consist of many files that can add up to several megabytes. Twitter.com, for example, is 3 MB+.

The issue is twofold. First, large files take longer to load, which is especially painful on a slow internet connection or a mobile device.

Second, each file requires a separate request to the server. The more requests the server receives, the more work it has to do, which slows the page down further.

Browser caching stores some of these files locally in the user’s browser. The first visit to your website takes the same time to load. But when the same user returns, refreshes the page or browses to a different page on your website, some of the files needed are already stored locally.

This means the browser has less data to download and fewer requests to make to the server, which results in shorter load times.

Importance of Browser Caching

Browser caching is important because it reduces the load on your web server, which ultimately reduces load times for your visitors.

How to Leverage Browser Caching

To enable browser caching, edit your HTTP headers to set expiry times for certain types of files.

Configuring Apache to Serve the Appropriate Headers

The .htaccess file is located in the root of your domain. It is a hidden file, but it should be visible in FTP clients such as CORE or FileZilla. You can edit it in Notepad or any basic text editor.

We set the caching parameters in this file to tell the browser which types of files to cache, as in the example below:

    ## EXPIRES CACHING ##
    <IfModule mod_expires.c>
    ExpiresActive On
    ExpiresByType image/jpg "access plus 1 year"
    ExpiresByType image/jpeg "access plus 1 year"
    ExpiresByType image/gif "access plus 1 year"
    ExpiresByType image/png "access plus 1 year"
    ExpiresByType text/css "access plus 1 month"
    ExpiresByType application/pdf "access plus 1 month"
    ExpiresByType text/x-javascript "access plus 1 month"
    ExpiresByType application/x-shockwave-flash "access plus 1 month"
    ExpiresByType image/x-icon "access plus 1 year"
    ExpiresDefault "access plus 2 days"
    </IfModule>
    ## EXPIRES CACHING ##

You can set different expiry times for different files on your website. Files that are updated more frequently (e.g. CSS files) can be given a shorter expiry time.

When you are done, save the file as it is, not as a .txt file.

If you are using a CMS, cache extensions or plugins may be available.

Recommendations

- Be aggressive with caching for all static resources.

- Set an expiry of at least one month (recommended: access plus 1 year).

- Do not set an expiry more than one year in the future.

Be careful

Be careful when enabling browser caching: if the expiry times set on certain files are too long, users will not get the fresh version of your website after it is updated.

This matters when you work with a designer on changes to your website: the designer may have updated the site, but you cannot see the changes because the changed elements are cached in your browser.

PageSpeed recommendations:

Caching headers should be set aggressively for all static resources.

The following settings are recommended for all cacheable resources:

Set Expires or Cache-Control: max-age to at least one month, and preferably up to one year, in the future. Do not set it to more than one year in the future, as that violates the RFC guidelines.

Set the Last-Modified date to the last time the resource was changed. If the Last-Modified date is sufficiently far in the past, the browser is unlikely to refetch the resource.
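If your pages are served by application code rather than by Apache, the same settings can be produced in a few lines. The sketch below is an illustration only: the function name and the one-year cap are assumptions drawn from the recommendations above, and `formatdate` from the Python standard library is used to produce HTTP-style dates.

```python
from email.utils import formatdate

ONE_YEAR = 365 * 24 * 60 * 60  # seconds


def caching_headers(now, last_modified, max_age=ONE_YEAR):
    """Build caching headers for a static resource.

    now and last_modified are POSIX timestamps (seconds since the epoch).
    """
    max_age = min(max_age, ONE_YEAR)  # never more than one year ahead
    return {
        "Cache-Control": f"max-age={max_age}",
        "Expires": formatdate(now + max_age, usegmt=True),
        "Last-Modified": formatdate(last_modified, usegmt=True),
    }
```

In practice you would pass `time.time()` as `now` and the file’s modification time as `last_modified`.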

Use fingerprinting to enable aggressive caching.

For resources that change occasionally, you can have the browser cache the resource until it changes on the server, at which point the server tells the browser that a new version is available. To achieve this, embed a fingerprint of the resource in its URL (i.e. the file path).
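One common way to fingerprint a resource is to put a short hash of its content into the file name, so the URL changes exactly when the content does. The following is a minimal sketch under that assumption; the function name and the eight-character digest length are arbitrary choices, not part of the PageSpeed recommendation.

```python
import hashlib
import os.path


def fingerprinted_name(filename, content, digest_length=8):
    """Return filename with a short content hash inserted before the extension."""
    digest = hashlib.sha256(content).hexdigest()[:digest_length]
    stem, ext = os.path.splitext(filename)
    return f"{stem}.{digest}{ext}"
```

Because an unchanged file always maps to the same name, it can be served with a far-future expiry, while any edit produces a new URL that browsers have never cached.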

Set the Vary header correctly for Internet Explorer

Internet Explorer does not cache resources whose Vary header contains fields other than Accept-Encoding, User-Agent or Host. To make sure IE caches these resources, remove any other fields from the Vary header.

Minify JavaScript

Overview

Compacting JavaScript code can save many bytes of data and speed up downloading, parsing and execution.

PageSpeed recommendations:

Minifying code means removing unnecessary bytes such as extra spaces, line breaks and indentation. Compacting JavaScript code has several benefits. First, for inline JavaScript and external files that you do not want cached, the smaller file size reduces the network latency incurred each time the page is downloaded. Second, minification can further improve the compression of external JS files and of HTML files in which JS code is inlined. Third, web browsers can load and run smaller files more quickly.

Several free tools can minify JavaScript, including JSMin, the Closure Compiler and the YUI Compressor. You can create a build process that uses these tools to minify and rename the development files and save them to a production directory. We recommend minifying any JS file of 4096 bytes or more. Any file that can be reduced by 25 bytes or more should benefit (smaller reductions will not yield an appreciable performance gain).
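In a build process, the two size thresholds quoted above can be applied as a simple check before deciding which minified files to ship. The sketch below is one possible reading of the rule (both conditions must hold); the function and constant names are invented for illustration.

```python
MIN_FILE_SIZE = 4096  # bytes; only files at least this large are considered
MIN_SAVINGS = 25      # bytes; smaller savings are not worth the effort


def should_minify(original_size, minified_size):
    """Apply the size thresholds to one file (sizes in bytes)."""
    savings = original_size - minified_size
    return original_size >= MIN_FILE_SIZE and savings >= MIN_SAVINGS
```

The minified size itself would come from running one of the tools named above, which this sketch does not do.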

Minify CSS

Overview

Compacting CSS code can save many bytes of data and speed up downloading, parsing and execution.

PageSpeed recommendations:

Minifying CSS offers the same benefits as minifying JavaScript: it reduces network latency, enhances compression and speeds up browser loading and execution.
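To show what minification actually removes, here is a deliberately naive CSS minifier. It is a sketch, not a replacement for a real tool: it assumes the stylesheet contains no strings or url() values with significant whitespace, and it leaves the space before a colon untouched so that descendant selectors keep their meaning.

```python
import re


def minify_css(css):
    """Naive CSS minifier: drops comments and redundant whitespace."""
    css = re.sub(r"/\*.*?\*/", "", css, flags=re.DOTALL)  # remove comments
    css = re.sub(r"\s+", " ", css)                        # collapse whitespace
    css = re.sub(r"\s*([{};,])\s*", r"\1", css)           # trim around punctuation
    css = re.sub(r":\s+", ":", css)                       # trim after colons
    css = css.replace(";}", "}")                          # drop final semicolons
    return css.strip()
```

For production use, a dedicated minifier is the safer choice, since it parses the stylesheet instead of pattern matching on it.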

Remove duplicate JavaScript and CSS

Overview

Duplicate JavaScript and CSS files hurt performance by creating redundant HTTP requests (IE only) and wasting time on repeated JavaScript execution (IE and Firefox).

Details from Yahoo!

For IE users: if an external script is included twice and is not cacheable, it generates two HTTP requests during page loading. Even if the script is cacheable, extra HTTP requests occur when the user reloads the page.

In both IE and Firefox, duplicate JavaScript wastes time evaluating the same script more than once. This redundant execution happens regardless of whether the script is cacheable.

YSlow recommendations:

The best way to avoid accidentally including the same script twice is to implement a script management module in your templating system. The typical way to include a script is to use the SCRIPT tag in your HTML page.

<script type="text/javascript" src="menu_1.0.17.js"></script>

In PHP, an alternative is to create a function called insertScript.

<?php insertScript("menu.js") ?>

In addition to preventing the same script from being inserted multiple times, this function could handle other issues with scripts, such as dependency checking and adding version numbers to script filenames to support far-future Expires headers.
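The source does not show how insertScript is implemented. As a rough illustration of the idea in Python, the hypothetical `ScriptManager` below emits each script tag at most once per page and adds a version number to the file name; dependency checking is left out.

```python
class ScriptManager:
    """Emit each script tag at most once per page, with a versioned file name."""

    def __init__(self, versions):
        self.versions = versions  # e.g. {"menu.js": "1.0.17"}
        self.inserted = set()

    def insert_script(self, name):
        if name in self.inserted:
            return ""  # already on the page; emit nothing
        self.inserted.add(name)
        stem, dot, ext = name.rpartition(".")
        version = self.versions.get(name)
        # Only rewrite names that have an extension and a known version.
        src = f"{stem}_{version}{dot}{ext}" if version and dot else name
        return f'<script type="text/javascript" src="{src}"></script>'
```

A template would create one manager per page and call `insert_script("menu.js")` wherever the script is needed; only the first call produces a tag.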

Optimize images

Overview

Loading appropriately sized images reduces page load time. In short:

- Reduce file sizes based on where the images will be displayed.

- Resize the image files themselves instead of resizing them with CSS.

- Save files in the appropriate format for their usage.

- Cost-benefit ratio: high.

- Access required.

What is optimizing images for the web?

Images created in programs such as Photoshop and Illustrator look great, but their file sizes are often very large. This is because the images are saved in a format that makes them easy to manipulate in different ways.

With file sizes exceeding a couple of megabytes per image, putting these files on your website would make it very slow to load.

Optimizing images for the web means saving or compiling them in a web-friendly format suited to what each image contains.

Images hold data other than the pixels seen on screen. This data can add unnecessary size to the image, which leads to longer load times as the user waits for the image to download.

In terms of cost versus benefit, optimizing images should be near the top of your page speed optimizations if you have not done it already.

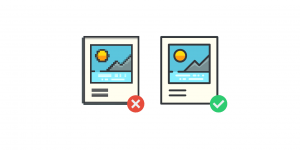

How Image Optimization Works

In simple terms, image optimization works by removing all the unnecessary data saved within the image to reduce its file size, based on where the image is used on the website.

Optimizing images for the web can reduce your total page load size by up to 80%.

There are two forms of compression to understand: lossy and lossless.

Images saved in a lossy format look slightly different from the original image when uncompressed, although the difference is only visible on close inspection. Lossy compression is well suited to the web because images take up little space yet remain sufficiently similar to the original.

Images saved in a lossless format retain all the information needed to reproduce the exact original image. As a result, these images carry more data and have a larger file size.

Images can also be optimized for the web by saving them with appropriate dimensions. You can resize an image on the web page with CSS, but the browser will still download the entire original file, then resize and display it.

Imagine using a poster-sized image as a thumbnail. Although it would be displayed at 20px by 20px, it would take just as long to load as the original poster. It is better to load a 20px image in the first place.

Importance of Image Optimization

Since 90% of most websites are graphics dependent, they contain a lot of image files, which is why image optimization is so important. Leaving these images uncompressed and in the wrong format can drastically slow down page load times.

Ways to Optimize Your Images

Fully optimizing images can be quite an art to perfect, because there is such a wide variety of images you might be dealing with. Here are the most common ways to optimize your images for the web.

- Keep white space around images to a minimum. Some developers use white space for padding, which should be avoided. Crop your images to remove any white space and use CSS to provide padding.

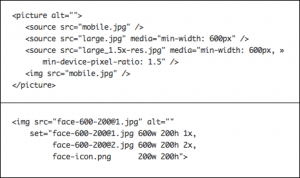

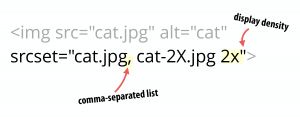

- When catering to users with displays of different pixel densities, it can be tempting to simply serve high-resolution images to everyone. Instead, use the srcset attribute so that each browser downloads an image of suitable size. Browser support for srcset is quite good, and browsers that lag behind can be supported with Scott Jehl’s picturefill.

- Use the proper file formats. For icons, bullets or any graphics with few colors, use a format such as GIF and save the file with fewer colors. For more detailed graphics, use the JPG format and reduce the quality.

- Save images at the proper dimensions. If you would otherwise use HTML or CSS to shrink an image, resize it in an image editor beforehand and save it at the required size to reduce the file size.

- You often need to display the same image in different sizes. In that case, you can serve a single image resource and scale it with HTML or CSS in the page that contains it.

- You will need some form of program to resize your images. GIMP is a simple editing program that can handle basic compression. For more advanced optimization, you will need to save specific files in Photoshop, Illustrator or Fireworks.

- Use an image editor to scale images to match the largest size needed in your page, and make sure you specify those dimensions in the page as well.
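Before resizing in an editor, it helps to know the target dimensions and how much you stand to save. The sketch below computes both from the original size and the largest width the page needs; the function names are illustrative, and pixel count is used only as a rough proxy for file size.

```python
def fit_width(width, height, max_width):
    """Scale (width, height) down to max_width, keeping the aspect ratio."""
    if width <= max_width:
        return width, height  # never upscale
    new_height = max(1, round(height * max_width / width))
    return max_width, new_height


def pixel_savings(width, height, max_width):
    """Fraction of pixels no longer sent after resizing before upload."""
    new_w, new_h = fit_width(width, height, max_width)
    return 1 - (new_w * new_h) / (width * height)
```

For a 4000 by 3000 photo shown at most 800 pixels wide, `fit_width` gives 800 by 600, which is about 96% fewer pixels to download and decode.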

Tools used by PageSpeed to test this recommendation

PageSpeed uses libjpeg-turbo for JPEGs and OptiPNG for PNGs and GIFs.

PageSpeed recommends:

Choose an appropriate image file format.

The type of an image can drastically affect its file size. Here are some guidelines for choosing an image file format:

- Use JPEGs for photographic-style images.

- Avoid BMPs and TIFFs.

- PNGs are almost always preferable to GIFs for graphics. The PNG format is supported by IE 4.0b1+, Mac IE 5.0+, Opera 3.51+ and Netscape 4.04+, as well as all versions of Safari and Firefox. IE versions 4 to 6 do not support alpha channel transparency (partial transparency), but they do support 256-color-or-less PNGs with 1-bit transparency (the same as is supported for GIFs). IE 7 and 8 support alpha transparency except when an alpha opacity filter is applied to the element. You can generate or convert suitable PNGs with GIMP by using “Indexed” rather than “RGB” mode. If you must maintain compatibility with 3.x-level browsers, serve an alternate GIF to those browsers.

- Use GIFs for very small or simple graphics (e.g. less than 10×10 pixels, or a color palette of less than 3 colors) and for images that contain animation. If you think an image might compress better as a GIF, try it as both a PNG and a GIF and pick the smaller one.

Use an image compressor

Several tools can perform further lossless compression on JPEG and PNG files without affecting image quality. For JPEG, we recommend jpegtran or jpegoptim (available on Linux only; run with the --strip-all option). For PNG, we recommend OptiPNG or PNGOUT.

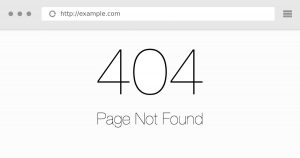

Avoid bad requests

Overview

Removing “broken links”, i.e. requests that result in 404/410 errors, avoids wasteful requests.

Details from Google

As your website changes over time, resources will inevitably be moved or deleted. If you do not update your frontend code accordingly, the server will issue 404 “Not found” or 410 “Gone” responses. These are wasteful, unnecessary requests that lead to a bad user experience and make your site look unprofessional. If such requests are for resources that can block subsequent browser processing, such as JS or CSS files, they can virtually “crash” your site. In the short term, scan your site for such links with a link checking tool, such as the crawl errors report in Google Webmaster Tools, and fix them. In the long term, your application should have a way of updating URL references whenever resources change location.

PageSpeed recommends:

Avoid using redirects to handle broken links.

Wherever possible, update the links to resources that have moved, or delete those links if the resources have been removed. Avoid using HTTP redirects to send users to the requested resources or to serve a substitute “suggestion” page. Redirects also slow down your site and are better avoided as much as possible.
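A scan for references that end in 404 or 410 responses can be sketched with the HTML parser from the Python standard library. To stay self-contained, the example does not make network requests: `status_of` is a hypothetical mapping from URL to status code that you would build from your server logs or a crawl.

```python
from html.parser import HTMLParser


class ResourceCollector(HTMLParser):
    """Collect every href and src value found in an HTML document."""

    def __init__(self):
        super().__init__()
        self.urls = []

    def handle_starttag(self, tag, attrs):
        for name, value in attrs:
            if name in ("href", "src") and value:
                self.urls.append(value)


def find_bad_requests(html, status_of):
    """Return referenced URLs whose known status is 404 or 410."""
    collector = ResourceCollector()
    collector.feed(html)
    return [url for url in collector.urls if status_of.get(url) in (404, 410)]
```

Each URL the function returns is a reference to update or delete in your templates, rather than one to paper over with a redirect.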

Serve resources from a consistent URL

Overview

Serving each resource from a unique URL eliminates duplicate download bytes and additional RTTs.

Details from Google

Sometimes you need to reference the same resource from multiple places in a page, as with images. More often, you share the same resources, such as .js and .css files, across multiple pages on a site. Whenever your pages need to include the same resource, it should always be served from the same URL. Ensuring that one resource is always assigned a single URL has several benefits. It reduces the overall payload size, since the browser does not download additional copies of the same bytes.

Furthermore, most browsers will issue no more than one HTTP request for a single URL in one session, whether or not the resource is cacheable, so you also save additional round-trip times. It is especially important to ensure that the same resource is not served from a different hostname, to avoid the performance penalty of additional DNS lookups.

Note that a relative URL and an absolute URL are consistent if the hostname of the absolute URL matches that of the containing document. For example, if the main page at www.example.com references the resources /images/example.gif and www.example.com/images/example.gif, the URLs are consistent. But if that page references /images/example.gif and mysite.example.com/images/example.gif, the URLs are not consistent.
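The consistency rule can be checked mechanically by resolving every reference against the URL of the page that contains it. This sketch uses `urljoin` from the Python standard library; the function names are illustrative, and the absolute URLs are written with an explicit `http://` scheme, which the example in the text leaves out.

```python
from urllib.parse import urljoin


def resolved(page_url, references):
    """Resolve each reference against the page URL; return the distinct results."""
    return {urljoin(page_url, ref) for ref in references}


def is_consistent(page_url, references):
    """True when every reference resolves to one and the same URL."""
    return len(resolved(page_url, references)) <= 1
```

With the page at `http://www.example.com/index.html`, the references `/images/example.gif` and `http://www.example.com/images/example.gif` resolve to the same URL, while a reference to `http://mysite.example.com/images/example.gif` does not.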

PageSpeed recommends:

Serve shared resources from a consistent URL across all pages in a site

For resources that are shared across multiple pages, make sure that each reference to the same resource uses an identical URL. If a resource is shared by multiple pages or sites that link to each other but are hosted on different domains or hostnames, it is better to serve the file from a single hostname than to re-serve it from the hostname of each parent document. In such cases, the caching benefits may outweigh the DNS lookup overhead. For example, if both mysite.example.com and yoursite.example.com use the same JS file, and the two sites link to each other (which will require a DNS lookup anyway), it makes sense to just serve the JS file from mysite.example.com. That way, the file is likely to be in the browser cache already when the user visits yoursite.example.com.

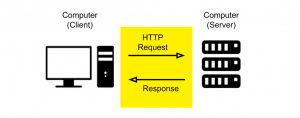

Make fewer HTTP requests

Overview

Combining files and using CSS sprites can reduce the number of HTTP requests.

Note: A page should not make more than 65-70 HTTP requests.

Details from Yahoo!

Reducing the number of components on a page reduces the number of HTTP requests needed to render it, which results in faster page loads.

YSlow recommends:

Some ways to reduce the number of components include:

- Combine files.

- Combine multiple scripts into one script.

- Combine multiple CSS files into one style sheet.

- Use CSS sprites and image maps.

Reducing the number of HTTP requests in your page is the place to start. It is one of the most significant ways to improve performance for first-time visitors. As Tenni Theurer describes in the blog post Browser Cache Usage – Exposed!, 40-60% of daily visitors to your site arrive with an empty cache. Making your page fast for these first-time visitors is key to a better user experience.
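Combining files is usually a build step that concatenates sources in a fixed order. A minimal sketch, with invented function names, could look like this; it works on file contents as strings and leaves reading and writing files to the caller.

```python
def combine(sources, separator="\n"):
    """Concatenate file contents, in order, into a single bundle."""
    return separator.join(text.rstrip("\n") for text in sources)


def requests_saved(file_count):
    """HTTP requests avoided by serving one bundle instead of file_count files."""
    return max(file_count - 1, 0)
```

For JavaScript, passing `separator=";\n"` is a common precaution, since a file that does not end with a semicolon can otherwise run into the start of the next one.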

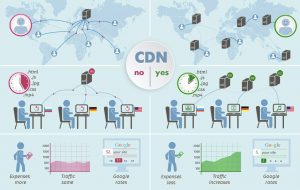

Use a Content Delivery Network (CDN)

Overview

With a CDN, users around the world get an equally fast web experience.

Details from Yahoo!

The closer the user is to your web server, the better the response time. Deploying your content across multiple, geographically dispersed servers makes your pages load faster from the user’s perspective.

YSlow recommendations:

A content delivery network (CDN) is a collection of web servers distributed across multiple locations to deliver content to users more efficiently.

The server selected to deliver content to a specific user is typically chosen based on a measure of network proximity.

For example, the server with the fewest network hops or the one with the quickest response time is chosen.
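Selection by response time boils down to picking the minimum of a set of measurements. The sketch below assumes you already have a response time in milliseconds for each candidate server; the server names in the usage note are made up, and real CDNs make this choice through DNS or routing rather than in application code.

```python
def pick_server(latency_ms):
    """Return the name of the server with the lowest measured response time."""
    if not latency_ms:
        raise ValueError("no servers to choose from")
    return min(latency_ms, key=latency_ms.get)
```

Given `{"us-east": 120, "eu-west": 35, "ap-south": 210}`, the function selects `eu-west`.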

Some large Internet companies run their own CDN, but it is more cost-effective to use a CDN service provider such as Akamai Technologies, Mirror Image Internet or Limelight Networks.

The cost of a CDN service can be prohibitive for start-up companies and private websites, but as your target audience grows larger and becomes more geographically dispersed, a CDN becomes necessary to achieve fast response times.

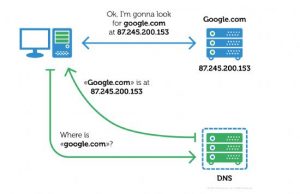

Reduce DNS lookups

Overview

DNS lookups delay the initial request to a host. Making requests to a large number of different hosts can therefore hurt performance.

Details from Yahoo!

Just as phonebooks map people’s names to their phone numbers, the Domain Name System (DNS) maps hostnames to IP addresses. When you type the URL www.yahoo.com into your browser, the browser contacts a DNS resolver, which returns the server’s IP address.

DNS has a cost: it typically takes 20 to 120 milliseconds to look up the IP address for a hostname. The browser cannot download anything from the host until the lookup completes.

YSlow recommends:

DNS lookups are cached for better performance. This caching can occur on a special caching server maintained by the user’s ISP or local area network, but caching also occurs on the individual user’s computer.

Most browsers have their own cache, separate from the operating system’s cache. As long as the browser keeps a DNS record in its own cache, it does not bother the operating system with a request for the record.

When the client’s DNS cache is empty (for both the browser and the operating system), the number of DNS lookups equals the number of unique hostnames in the web page. This includes the hostnames used in the page’s URL, images, script files, stylesheets, Flash objects, etc. Reducing the number of unique hostnames reduces the number of DNS lookups.

Reducing the number of unique hostnames can also reduce the amount of parallel downloading that takes place in the page. Avoiding DNS lookups cuts response times, but reducing parallel downloads may increase them. A good compromise between reducing DNS lookups and allowing a high degree of parallel downloads is to split these components across at least two but no more than four hostnames.
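To see where a page stands, you can count the unique hostnames among the URLs it loads. The sketch below uses `urlparse` from the Python standard library; the function names are illustrative, and the two-to-four range is the compromise suggested above.

```python
from urllib.parse import urlparse


def unique_hostnames(urls):
    """Hostnames a browser with an empty DNS cache would have to look up."""
    hosts = (urlparse(url).hostname for url in urls)
    return {host for host in hosts if host}


def within_recommended_range(urls, low=2, high=4):
    """True when the page uses between low and high unique hostnames."""
    return low <= len(unique_hostnames(urls)) <= high
```

The list of URLs should include the page itself along with its images, scripts and stylesheets, written as absolute URLs so that each one carries a hostname.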