In this post, we discuss how to write and use reusable Terraform modules.

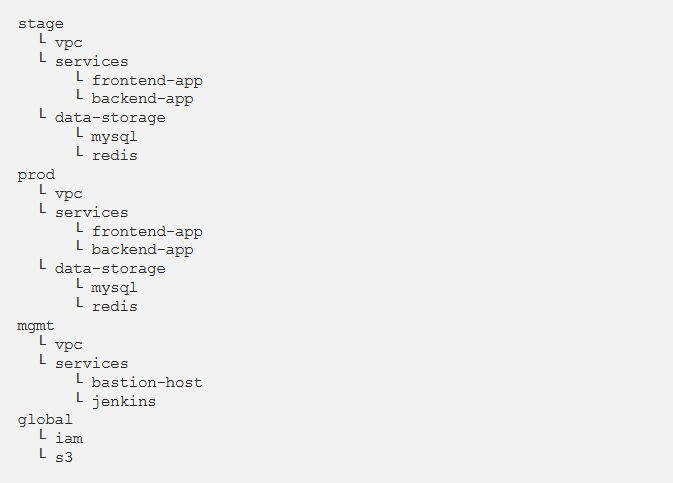

We recommend using the file layout for Terraform projects:

How do you avoid code duplication when you use such a file layout? For example, how do you avoid copying and pasting the code for the same app deployed in several environments, such as stage/services/frontend-app and prod/services/frontend-app?

In a general-purpose programming language such as Ruby, if you find the same code copied and pasted in multiple places, you can put that code inside a function and call that function wherever you need it:

    def example_function()
      puts "Hello, World"
    end

    # Other places in your code
    example_function()

With Terraform, you can put your code inside a Terraform module and reuse that module in many places throughout your code. Instead of having the same code copied and pasted in the staging and production environments, both environments can reuse code from the same module.

This can be a big deal. Modules are a key ingredient for writing reusable, maintainable, and testable Terraform code. Once you start utilising them, there is no going back:

- Start developing everything as a module.

- Start building a library of modules to share within your organisation.

- Start using modules you find online.

- Start thinking of your whole infrastructure as a set of reusable modules.

In this post, we explain how to use Terraform modules by covering the following topics:

- Module basics

- Module inputs

- Module outputs

- Versioned modules

1. Module basics

A Terraform module is very simple: any set of Terraform configuration files in a folder is a module (the module in the current working directory is called the root module). To see what modules are really capable of, you have to use one module from another module.

Instead of copying and pasting the code all over the place, you can turn it into a reusable module by placing it in a folder.

Create a new folder named modules and move all the files (i.e., main.tf, variables.tf, and outputs.tf) from the webserver cluster into modules/services/webserver-cluster. Open the main.tf file in modules/services/webserver-cluster and remove the provider definition. Providers should be configured by the user of the module, not by the module itself.
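A module can still state which provider it depends on without configuring it. The fragment below is a minimal sketch of that idea using a required_providers block; the file name versions.tf and the version constraints are assumptions chosen for illustration and are not part of the original setup (the source syntax shown needs Terraform 0.13 or later).

```hcl
# modules/services/webserver-cluster/versions.tf (hypothetical file name)
terraform {
  # Assumed minimum Terraform version for this sketch.
  required_version = ">= 0.13"

  required_providers {
    # Declares the dependency only; the region and credentials are still
    # configured by whoever uses the module.
    aws = {
      source  = "hashicorp/aws"
      version = ">= 3.0" # assumed constraint, adjust to what you have tested
    }
  }
}
```

With a declaration like this, the module documents what it needs while the caller keeps full control over how the provider is configured.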

Now you can make use of this module in, for example, your staging environment. The syntax for using a module is:

    module "<NAME>" {
      source = "<SOURCE>"

      [CONFIG ...]
    }

NAME is an identifier that you can use throughout the Terraform code to refer to this module (e.g., web-service).

SOURCE is the path where the module code can be found (e.g., modules/services/webserver-cluster).

CONFIG consists of one or more arguments that are specific to that module (e.g., num_servers = 5).

For example, you can create a new file in stage/services/webserver-cluster/main.tf and use the webserver-cluster module in it as follows:

    provider "aws" {
      region = "us-east-2"
    }

    module "webserver_cluster" {
      source = "../../../modules/services/webserver-cluster"
    }

You can then reuse the exact same module in the production environment by creating a new prod/services/webserver-cluster/main.tf file with the following contents:

    provider "aws" {
      region = "us-east-2"
    }

    module "webserver_cluster" {
      source = "../../../modules/services/webserver-cluster"
    }

And there you have it: code reuse across environments with minimal copy/paste! Note that whenever you add a module to your Terraform configurations or change the source parameter of a module, you have to run the init command before you run plan or apply:

    $ terraform init

    Initializing modules...
    - webserver_cluster in ../../../modules/services/webserver-cluster

    Initializing the backend...

    Initializing provider plugins...

    Terraform has been successfully initialized!

You have now seen all the tricks the init command has up its sleeve: it downloads providers and modules and configures your backends, all in one handy command.

Before you run the apply command on this code, note that there is a problem with the webserver-cluster module: all the names are hard-coded. That is, the names of the security groups, the CLB, and the other resources are all hard-coded, so if you utilise this module more than once, you'll get name conflict errors. To fix this, you have to add configurable inputs to the webserver-cluster module so that it can behave differently in different environments.

2. Module inputs

In a general-purpose programming language like Ruby, you can make a function configurable by adding input parameters to it:

    def example_function(param1, param2)
      puts "Hello, #{param1} #{param2}"
    end

    # Other places in your code
    example_function("foo", "bar")

In Terraform, modules can have input parameters too. To define them, you use a mechanism you're already familiar with: input variables. Open modules/services/webserver-cluster/variables.tf and add a new input variable:

    variable "cluster_name" {
      description = "The name to use for all the cluster resources"
      type        = string
    }

Next, go through modules/services/webserver-cluster/main.tf and use var.cluster_name instead of the hard-coded names (e.g., instead of "terraform-asg-example"). For example, this is how you do it for the CLB security group:

    resource "aws_security_group" "elb" {
      name = "${var.cluster_name}-elb"

      ingress {
        from_port   = 80
        to_port     = 80
        protocol    = "tcp"
        cidr_blocks = ["0.0.0.0/0"]
      }

      egress {
        from_port   = 0
        to_port     = 0
        protocol    = "-1"
        cidr_blocks = ["0.0.0.0/0"]
      }
    }

Notice how the name parameter is set to "${var.cluster_name}-elb". You will have to make a similar change to the other aws_security_group resource (e.g., give it the name "${var.cluster_name}-instance"), the aws_elb resource, and the tag section of the aws_autoscaling_group resource.
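As a rough sketch, those remaining changes might look like the fragment below. The resource name instance for the second security group and the choice to name the CLB after the cluster are assumptions for illustration; the elided arguments, marked # (...), stay exactly as they already are in your module.

```hcl
# Fragment of modules/services/webserver-cluster/main.tf (not complete on its own)

resource "aws_security_group" "instance" { # resource name "instance" is assumed
  name = "${var.cluster_name}-instance"
  # (...)
}

resource "aws_elb" "example" {
  # Assumed naming scheme: reuse the cluster name for the load balancer.
  name = var.cluster_name
  # (...)
}

resource "aws_autoscaling_group" "example" {
  # (...)

  # Tag each instance with the cluster name instead of a hard-coded value.
  tag {
    key                 = "Name"
    value               = var.cluster_name
    propagate_at_launch = true
  }
}
```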

Now, in the staging environment, in stage/services/webserver-cluster/main.tf, you can set the new input variable accordingly:

    module "webserver_cluster" {
      source = "../../../modules/services/webserver-cluster"

      cluster_name = "webservers-stage"
    }

You have to do the same in the production environment in prod/services/webserver-cluster/main.tf:

    module "webserver_cluster" {
      source = "../../../modules/services/webserver-cluster"

      cluster_name = "webservers-prod"
    }

As you can see, you set input variables for a module using the same syntax as setting arguments for a resource. The input variables are the API of the module, controlling how it behaves in different environments. This example uses different names in different environments, but you may also need to make other parameters configurable. For example, in staging, you may want to run a small webserver cluster to save money, but in production, you may want to run a larger cluster to handle plenty of traffic. To do that, you can add three additional input variables to modules/services/webserver-cluster/variables.tf:

    variable "instance_type" {
      description = "The type of EC2 Instances to run (e.g. t2.micro)"
      type        = string
    }

    variable "min_size" {
      description = "The minimum number of EC2 Instances in the ASG"
      type        = number
    }

    variable "max_size" {
      description = "The maximum number of EC2 Instances in the ASG"
      type        = number
    }
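If most callers are expected to pass the same value, an input variable can also carry a default, and a validation block can reject values that make no sense. The following is a minimal sketch of an alternative declaration of min_size along those lines; the default of 2 and the validation rule are assumptions for illustration, not part of the module as described, and validation blocks need Terraform 0.13 or later.

```hcl
# Alternative declaration of min_size (use it instead of, not alongside, the one above).
variable "min_size" {
  description = "The minimum number of EC2 Instances in the ASG"
  type        = number

  # Assumed default: callers that omit min_size get a two-instance minimum.
  default = 2

  # Assumed rule: fail at plan time if the caller asks for fewer than one instance.
  validation {
    condition     = var.min_size >= 1
    error_message = "The min_size value must be at least 1."
  }
}
```

Adding a default makes the input optional, which changes the module's API, so it is worth deciding deliberately which inputs callers must always set.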

Next, update the launch configuration in modules/services/webserver-cluster/main.tf to set its instance_type parameter to the new var.instance_type input variable:

    resource "aws_launch_configuration" "example" {
      image_id      = "ami-0c55b159cbfafe1f0"
      instance_type = var.instance_type

      # (...)
    }

Also, you have to update the ASG definition in the same file to set its min_size and max_size parameters to the new var.min_size and var.max_size input variables:

    resource "aws_autoscaling_group" "example" {
      launch_configuration = aws_launch_configuration.example.id
      availability_zones   = data.aws_availability_zones.all.names

      min_size = var.min_size
      max_size = var.max_size

      # (...)
    }

Now, in the staging environment (stage/services/webserver-cluster/main.tf), you can keep the cluster small and inexpensive by setting instance_type to t2.micro and min_size and max_size to 2:

    module "webserver_cluster" {
      source = "../../../modules/services/webserver-cluster"

      cluster_name  = "webservers-stage"
      instance_type = "t2.micro"
      min_size      = 2
      max_size      = 2
    }

In the production environment, on the other hand, you can utilise a bigger instance_type with more CPU and memory, such as m4.large (note: this instance type is not part of the AWS free tier, so if you're only using this for learning and do not want to be charged, stick with t2.micro for the instance_type), and you can set max_size to 10 to let the cluster shrink or grow according to the load (don't worry, the cluster will initially launch with two instances):

    module "webserver_cluster" {
      source = "../../../modules/services/webserver-cluster"

      cluster_name  = "webservers-prod"
      instance_type = "m4.large"
      min_size      = 2
      max_size      = 10
    }

3. Module outputs

A powerful feature of Auto Scaling Groups is that you can configure them to increase or decrease the number of servers you have running in response to load. One way to do this is to use an auto scaling schedule, which can change the size of the cluster at a scheduled time during the day. For example, if traffic to your cluster is higher during regular business hours, you can use an auto scaling schedule to increase the number of servers at 9 a.m. and reduce it at 5 p.m.

If you define the auto scaling schedule in the webserver-cluster module, it would apply to both staging and production. Because you do not need this sort of scaling in your staging environment, for the time being, you can define the auto scaling schedule directly in the production configurations:

    resource "aws_autoscaling_schedule" "scale_out_business_hours" {
      scheduled_action_name = "scale-out-during-business-hours"
      min_size              = 2
      max_size              = 10
      desired_capacity      = 10
      recurrence            = "0 9 * * *"
    }

    resource "aws_autoscaling_schedule" "scale_in_at_night" {
      scheduled_action_name = "scale-in-at-night"
      min_size              = 2
      max_size              = 10
      desired_capacity      = 2
      recurrence            = "0 17 * * *"
    }

This code uses one aws_autoscaling_schedule resource to increase the number of servers to 10 during the morning hours (the recurrence parameter uses cron syntax, so "0 9 * * *" means "9 a.m. every day") and a second aws_autoscaling_schedule resource to reduce the number of servers at night ("0 17 * * *" means "5 p.m. every day"). However, both usages of aws_autoscaling_schedule are missing a required parameter, autoscaling_group_name, which specifies the name of the ASG. The ASG itself is defined within the webserver-cluster module, so how do you access its name? In a general-purpose programming language like Ruby, functions can return values:

    def example_function(param1, param2)
      return "Hello, #{param1} #{param2}"
    end

    # Other places in your code
    return_value = example_function("foo", "bar")

In Terraform, a module can also return values. Again, this is done using a mechanism you already know: output variables. You can add the ASG name as an output variable in modules/services/webserver-cluster/outputs.tf as follows:

    output "asg_name" {
      value       = aws_autoscaling_group.example.name
      description = "The name of the Auto Scaling Group"
    }

You can access module output variables the same way as resource output attributes. The syntax is:

module.<MODULE_NAME>.<OUTPUT_NAME>

For example:

module.frontend.asg_name

You can use this syntax in prod/services/webserver-cluster/main.tf to set the autoscaling_group_name parameter in each of the aws_autoscaling_schedule resources:

    resource "aws_autoscaling_schedule" "scale_out_business_hours" {
      scheduled_action_name = "scale-out-during-business-hours"
      min_size              = 2
      max_size              = 10
      desired_capacity      = 10
      recurrence            = "0 9 * * *"

      autoscaling_group_name = module.webserver_cluster.asg_name
    }

    resource "aws_autoscaling_schedule" "scale_in_at_night" {
      scheduled_action_name = "scale-in-at-night"
      min_size              = 2
      max_size              = 10
      desired_capacity      = 2
      recurrence            = "0 17 * * *"

      autoscaling_group_name = module.webserver_cluster.asg_name
    }
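Because recurrence is plain cron syntax, the same resource can express more specific schedules. The sketch below is a hypothetical variant that scales out on weekdays only; the resource name, the action name, and the time zone are assumptions for illustration, and the time_zone argument requires a reasonably recent AWS provider version (without it, the cron expression is evaluated in UTC).

```hcl
# Hypothetical variant of the scale-out schedule: weekdays only, in a named time zone.
resource "aws_autoscaling_schedule" "scale_out_weekdays" {
  scheduled_action_name = "scale-out-on-weekdays"
  min_size              = 2
  max_size              = 10
  desired_capacity      = 10

  # Cron fields: minute, hour, day of month, month, day of week.
  # "0 9 * * 1-5" means 9 a.m., Monday through Friday.
  recurrence = "0 9 * * 1-5"

  # Assumed time zone; omit this argument to keep the schedule in UTC.
  time_zone = "America/New_York"

  # The ASG name still comes from the module output.
  autoscaling_group_name = module.webserver_cluster.asg_name
}
```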

You may want to expose one other output in the webserver-cluster module: the DNS name of the CLB, so that you know what URL to test when the cluster is deployed. To do that, you again add an output variable in modules/services/webserver-cluster/outputs.tf:

    output "clb_dns_name" {
      value       = aws_elb.example.dns_name
      description = "The domain name of the load balancer"
    }

You can then "pass through" this output in stage/services/webserver-cluster/outputs.tf and prod/services/webserver-cluster/outputs.tf as follows:

    output "clb_dns_name" {
      value       = module.webserver_cluster.clb_dns_name
      description = "The domain name of the load balancer"
    }

4. Versioned modules

If both your staging and production environments point to the same module folder, then as soon as you make a change in that folder, it will affect both environments on the very next deployment. This sort of coupling makes it harder to test a change in staging without any chance of affecting production. A better approach is to create versioned modules so that you can use one version in staging (e.g., v0.0.2) and a different version in production (e.g., v0.0.1).

In all the module examples you've seen so far, whenever you used a module, you set the source parameter of the module to a local file path. In addition to file paths, Terraform supports other types of module sources, such as Git URLs, Mercurial URLs, and arbitrary HTTP URLs. The easiest way to create a versioned module is to put the code for the module in a separate Git repository and to set the source parameter to that repository's URL. That means your Terraform code will be spread out across (at least) two repositories:

- modules: This repo defines reusable modules. Think of each module as a "blueprint" that defines a specific part of your infrastructure.

- live: This repo defines the live infrastructure you're running in each environment, such as stage, prod, mgmt, etc. Think of this as the "houses" you built from the "blueprints" in the modules repo.

The modified folder structure for your Terraform code will now look something like this:

To create this folder structure, you first have to move the stage, prod, and global folders into a folder called live. Next, configure the live and modules folders as separate Git repositories. Here is an example of how to do that for the modules folder:

    $ cd modules
    $ git init
    $ git add .
    $ git commit -m "Initial commit of modules repo"
    $ git remote add origin "(URL OF REMOTE GIT REPOSITORY)"
    $ git push origin master

You can add a tag to the modules repo to use as a version number. If you're using GitHub, you can use the GitHub UI to create a release, which will create a tag under the hood. If you are not using GitHub, you can use the Git CLI:

    $ git tag -a "v0.0.1" -m "First release of webserver-cluster module"
    $ git push --follow-tags

You can now use this versioned module in both staging and production by specifying a Git URL in the source parameter. If your modules repo were in the GitHub repo github.com/foo/modules, this is what that would look like in live/stage/services/webserver-cluster/main.tf.

Note: The double slash in the Git URL is required:

    module "webserver_cluster" {
      source = "github.com/foo/modules//webserver-cluster?ref=v0.0.1"

      cluster_name  = "webservers-stage"
      instance_type = "t2.micro"
      min_size      = 2
      max_size      = 2
    }

The ref parameter lets you specify a particular Git commit via its SHA-1 hash, a branch name, or, as in this example, a specific Git tag. I usually suggest using Git tags as version numbers for modules. Branch names are not stable, because you always get the most recent commit on a branch, which can change whenever Terraform downloads the module again, and SHA-1 hashes are not very human-friendly. Git tags are as stable as a commit (in fact, a tag is just a pointer to a commit), but they let you use a friendly, readable name.
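To make the three options concrete, the sketch below shows the same staging module block with each kind of ref. Only one source line can be active at a time, so the alternatives are commented out; the branch name master is taken from the Git commands in this post, and <SHA1_HASH> is a placeholder rather than a real commit.

```hcl
module "webserver_cluster" {
  # Option 1: a Git tag (the approach suggested above).
  source = "github.com/foo/modules//webserver-cluster?ref=v0.0.1"

  # Option 2: a branch name. Resolves to whatever the latest commit is
  # when the module is downloaded, so results can drift over time.
  # source = "github.com/foo/modules//webserver-cluster?ref=master"

  # Option 3: a commit hash. Stable, but hard to read.
  # <SHA1_HASH> is a placeholder, not a real commit.
  # source = "github.com/foo/modules//webserver-cluster?ref=<SHA1_HASH>"

  cluster_name  = "webservers-stage"
  instance_type = "t2.micro"
  min_size      = 2
  max_size      = 2
}
```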

Now that you've updated your Terraform code to use a versioned module URL, you have to tell Terraform to download the module code by re-running terraform init:

    $ terraform init

    Initializing modules...
    Downloading github.com/foo/modules?ref=v0.0.1
    - webserver_cluster in .terraform/modules/services/webserver-cluster(…)

This time, you can see that Terraform downloads the module code from Git instead of your local filesystem. Once the module code has been downloaded, you can run the apply command as usual.

Note: If your Terraform module is in a private Git repository, then to use that repo as a module source, you have to give Terraform a way to authenticate to that Git repository. I suggest using SSH auth so that you don't need to hard-code the credentials for your repo in the code itself. For example, with GitHub, an SSH source URL should be of the form:

    source = "git::git@github.com:<OWNER>/<REPO>.git//<PATH>?ref=<VERSION>"

For example:

    source = "git::git@github.com:gruntwork-io/terraform-google-gke.git//modules/gke-cluster?ref=v0.1.2"

Now that you're using versioned modules, let's walk through the process of making changes. Suppose you made a few changes to the webserver-cluster module and you want to test them out in staging. First, you commit those changes to the modules repo:

    $ cd modules
    $ git add .
    $ git commit -m "Made some changes to webserver-cluster"
    $ git push origin master

After that, you create a new tag in the modules repo:

    $ git tag -a "v0.0.2" -m "Second release of webserver-cluster"
    $ git push --follow-tags

And now you can update only the source URL used in the staging environment (live/stage/services/webserver-cluster/main.tf) to use this latest version:

    module "webserver_cluster" {
      source = "github.com/foo/modules//webserver-cluster?ref=v0.0.2"

      cluster_name  = "webservers-stage"
      instance_type = "t2.micro"
      min_size      = 2
      max_size      = 2
    }

In production (live/prod/services/webserver-cluster/main.tf), you can cheerfully keep on running v0.0.1 unchanged:

    module "webserver_cluster" {
      source = "github.com/foo/modules//webserver-cluster?ref=v0.0.1"

      cluster_name  = "webservers-prod"
      instance_type = "m4.large"
      min_size      = 2
      max_size      = 10
    }

Once v0.0.2 has been thoroughly tested and proven in staging, you can then update production. And if there turns out to be a bug in v0.0.2, it is no big deal, because it does not affect the real users of your production environment. Fix the bug, release a new version, and repeat the entire process until you have something stable enough for production.
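Promoting the tested version is then a one-line change. As a sketch, assuming the same production settings used earlier in this post, live/prod/services/webserver-cluster/main.tf would look like this after the update, followed by another terraform init to download the new version:

```hcl
module "webserver_cluster" {
  # Only the ref changes: production moves from v0.0.1 to the version proven in staging.
  source = "github.com/foo/modules//webserver-cluster?ref=v0.0.2"

  cluster_name  = "webservers-prod"
  instance_type = "m4.large"
  min_size      = 2
  max_size      = 10
}
```

Rolling back works the same way: point ref at the previous tag and re-run init and apply.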

Conclusion

By defining infrastructure as code in modules, you can apply a variety of coding best practices to your infrastructure. You get to spend your time building modules instead of manually deploying code; you can validate each change to a module through code reviews and automated tests; you can create versioned releases of each module and document the changes in every release; and you can safely try out different versions of a module in different environments and roll back to a previous version if you hit a problem.

All of this can dramatically increase your ability to build infrastructure quickly and reliably. You can encapsulate complicated pieces of infrastructure behind simple APIs that can be reused all over your tech stack.